A developer is running Spark SQL queries and notices underutilization of resources. Executors are idle, and the number of tasks per stage is low.

What should the developer do to improve cluster utilization?

A data engineer is running a Spark job to process a dataset of 1 TB stored in distributed storage. The cluster has 10 nodes, each with 16 CPUs. Spark UI shows:

Low number of Active Tasks

Many tasks complete in milliseconds

Fewer tasks than available CPUs

Which approach should be used to adjust the partitioning for optimal resource allocation?
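As a rough illustration of the sizing arithmetic behind this scenario, the sketch below estimates a partition count for the cluster described above. The helper name suggest_partition_count, the 128 MiB target partition size, and the reading of "1 TB" as 1 TiB are assumptions made for this example, not values given in the question.

```python
import math


def suggest_partition_count(dataset_bytes: int, total_cores: int,
                            target_partition_bytes: int = 128 * 1024**2) -> int:
    """Hypothetical helper: pick a partition count that yields roughly
    target-sized partitions, rounded up to a multiple of the core count
    so that every wave of tasks can keep all cores busy."""
    by_size = math.ceil(dataset_bytes / target_partition_bytes)
    waves = max(1, math.ceil(by_size / total_cores))
    return waves * total_cores


total_cores = 10 * 16        # 10 nodes x 16 CPUs, from the scenario
dataset_bytes = 1024**4      # "1 TB", assumed here to mean 1 TiB
partitions = suggest_partition_count(dataset_bytes, total_cores)
print(partitions)  # 8320
```

A number like this could then be passed to df.repartition(partitions), or used as a starting value for spark.sql.shuffle.partitions, and tuned against what the Spark UI shows.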

A data engineer is streaming data from Kafka and requires:

Minimal latency

Exactly-once processing guarantees

Which trigger mode should be used?

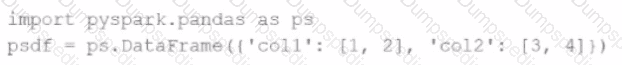

Given the code fragment:

import pyspark.pandas as ps

psdf = ps.DataFrame({'col1': [1, 2], 'col2': [3, 4]})

Which method is used to convert a Pandas API on Spark DataFrame (pyspark.pandas.DataFrame) into a standard PySpark DataFrame (pyspark.sql.DataFrame)?

A developer notices that all the post-shuffle partitions in a dataset are smaller than the value set for spark.sql.adaptive.maxShuffledHashJoinLocalMapThreshold.

Which type of join will Adaptive Query Execution (AQE) choose in this case?

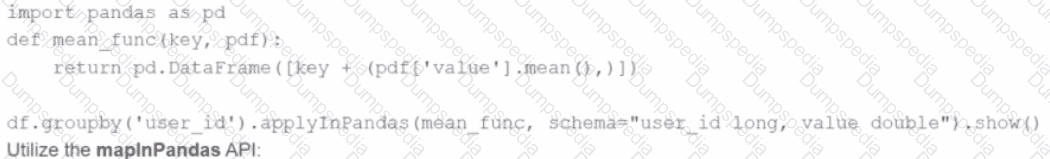

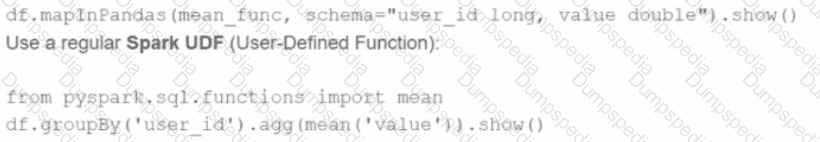

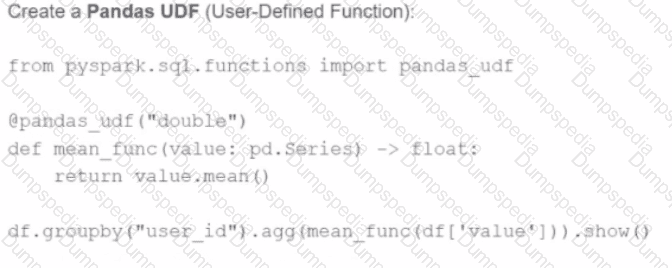

A developer is working with a pandas DataFrame containing user behavior data from a web application.

Which approach should be used for executing a groupBy operation in parallel across all workers in Apache Spark 3.5?

A)

Use the applyInPandas API

B)

C)

D)

An engineer notices a significant increase in the execution time of a Spark job. After some investigation, the engineer decides to check the logs produced by the Executors.

How should the engineer retrieve the Executor logs to diagnose performance issues in the Spark application?

A data scientist is working with a Spark DataFrame called customerDF that contains customer information. The DataFrame has a column named email with customer email addresses. The data scientist needs to split this column into username and domain parts.

Which code snippet splits the email column into username and domain columns?

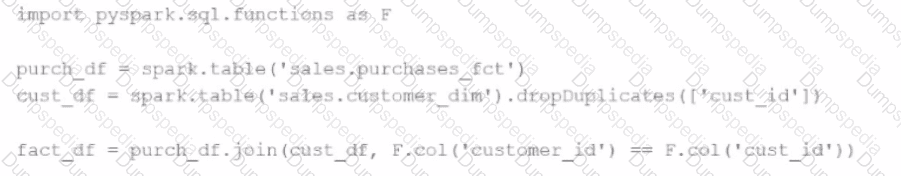

A developer is trying to join two tables, sales.purchases_fct and sales.customer_dim, using the following code:

fact_df = purch_df.join(cust_df, F.col('customer_id') == F.col('custid'))

The developer has discovered that customers in the purchases_fct table that do not exist in the customer_dim table are being dropped from the joined table.

Which change should be made to the code to stop these customer records from being dropped?

What is the relationship between jobs, stages, and tasks during execution in Apache Spark?

Options:

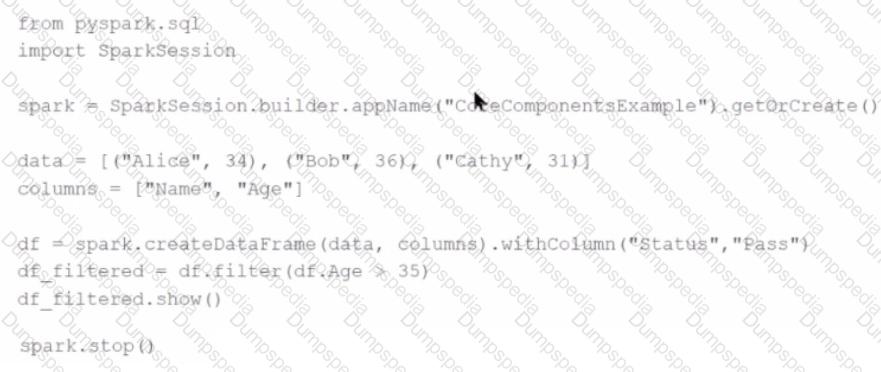

Given the following code snippet in my_spark_app.py:

What is the role of the driver node?

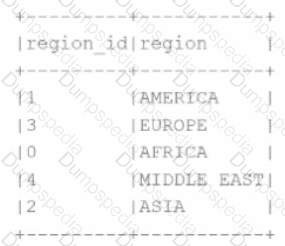

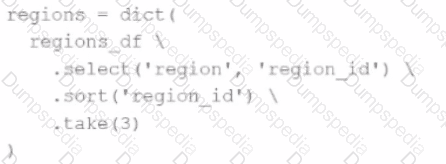

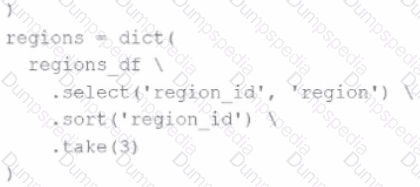

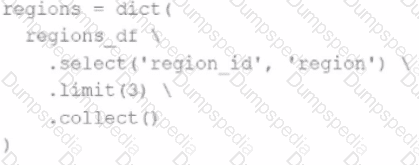

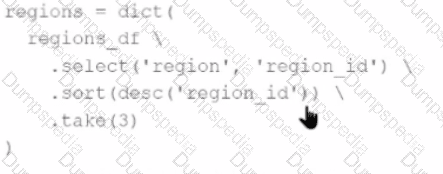

A developer needs to produce a Python dictionary using data stored in a small Parquet table, which looks like this:

The resulting Python dictionary must contain a mapping of region -> region_id for the smallest 3 region_id values.

Which code fragment meets the requirements?

A)

B)

C)

D)

A Spark engineer is troubleshooting a Spark application that has been encountering out-of-memory errors during execution. By reviewing the Spark driver logs, the engineer notices multiple "GC overhead limit exceeded" messages.

Which action should the engineer take to resolve this issue?

A data engineer noticed improved performance after upgrading from Spark 3.0 to Spark 3.5. The engineer found that Adaptive Query Execution (AQE) was enabled.

Which operation is AQE implementing to improve performance?

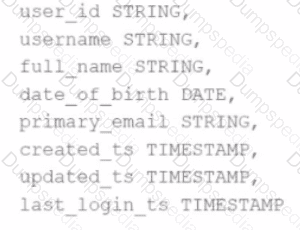

A data scientist has identified that some records in the user profile table contain null values in any of the fields, and such records should be removed from the dataset before processing. The schema includes fields like user_id, username, date_of_birth, created_ts, etc.

The schema of the user profile table looks like this:

Which block of Spark code can be used to achieve this requirement?

Options:

An engineer has a large ORC file located at /file/test_data.orc and wants to read only specific columns to reduce memory usage.

Which code fragment will select the columns, i.e., col1 and col2, during the reading process?

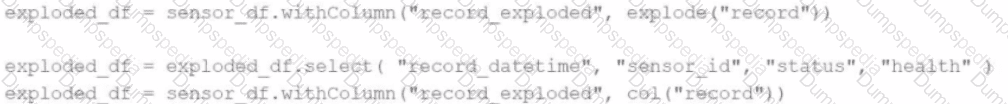

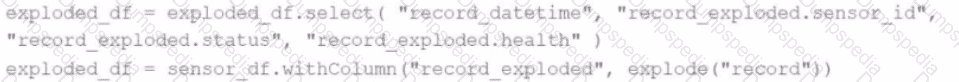

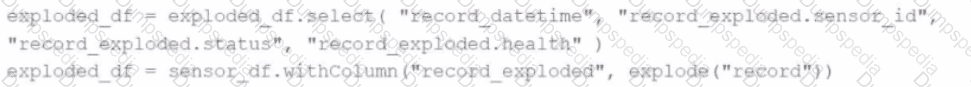

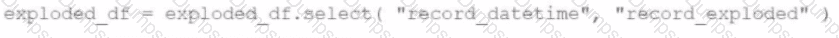

A Data Analyst is working on the DataFrame sensor_df, which contains two columns:

Which code fragment returns a DataFrame that splits the record column into separate columns and has one array item per row?

A)

B)

C)

D)

A data scientist is working on a large dataset in Apache Spark using PySpark. The data scientist has a DataFrame df with columns user_id, product_id, and purchase_amount and needs to perform some operations on this data efficiently.

Which sequence of operations results in transformations that require a shuffle followed by transformations that do not?

A data scientist wants each record in the DataFrame to contain:

The entire contents of a file

The full file path

The first attempt at the code does read the text files, but each record contains a single line rather than the full text of a file. This code is shown below:

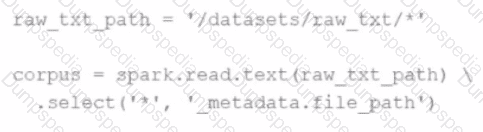

Code:

corpus = spark.read.text("/datasets/raw_txt/*") \
    .select('*', '_metadata.file_path')

Which change will ensure one record per file?

Options:

The following code fragment results in an error:

@F.udf(T.IntegerType())
def simple_udf(t: str) -> str:
    return answer * 3.14159

Which code fragment should be used instead?
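One plausible reading of the failure is that the fragment mixes up its types and names: the declared return type is IntegerType while the body multiplies by a float, the hints say str, and the body refers to a name, answer, that is never defined. The sketch below shows one way the function could be written, assuming the input column is numeric. The Spark registration appears only as a comment so the function can be checked without a cluster.

```python
def simple_udf(t: float) -> float:
    # Use the parameter itself; the original body referenced an undefined name.
    return t * 3.14159


# Assumed Spark registration (requires pyspark; not executed here):
#   from pyspark.sql import functions as F, types as T
#   simple_udf_spark = F.udf(simple_udf, T.DoubleType())

result = simple_udf(2.0)
print(result)
```

Declaring DoubleType rather than IntegerType keeps the declared return type in line with the float that the multiplication produces.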